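Combine and shuffle data: Positive and negative samples are combined into a feature matrix (X) and a corresponding label vector (y). Both are stacked in the same order so that each row of X stays aligned with its label. The shuffling itself is performed by train_test_split in the next step, which shuffles the data by default: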

# Combine the data (shuffling happens in train_test_split)
X = np.vstack([positive_X, negative_X])
y = np.concatenate([positive_y, negative_y])
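If you want the combined arrays shuffled before any further processing, one option is to apply a single shared permutation to both. The following is a minimal sketch, not the chapter's own code: the small `positive_X`, `negative_X`, `positive_y`, and `negative_y` arrays are hypothetical stand-ins for the real feature arrays.

```python
import numpy as np

# Hypothetical stand-ins for the real data: 3 positive and 2 negative
# samples with 4 features each
positive_X = np.ones((3, 4))
negative_X = np.zeros((2, 4))
positive_y = np.ones(len(positive_X), dtype=int)
negative_y = np.zeros(len(negative_X), dtype=int)

# Combine the samples and the labels in the same order
X = np.vstack([positive_X, negative_X])
y = np.concatenate([positive_y, negative_y])

# Shuffle both arrays with one shared permutation so that each row
# of X stays paired with its label in y
rng = np.random.default_rng(42)
order = rng.permutation(len(X))
X, y = X[order], y[order]

print(X.shape, y.shape)
```

Indexing both arrays with the same `order` is what keeps features and labels aligned; shuffling them independently would break the pairing.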

Split data into training and testing sets: The data is split into training and testing sets using the train_test_split function from scikit-learn:

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)
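To see what the split produces, the sketch below runs train_test_split on hypothetical random data (100 samples, 8 features, balanced labels). The `stratify=y` argument is an addition that does not appear in the chapter's call; it is shown here as one way to keep the voice/non-voice ratio the same in both subsets.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical data: 100 samples, 8 features, balanced binary labels
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 8))
y = np.array([1] * 50 + [0] * 50)

# test_size=0.2 holds out 20% of the samples; random_state fixes the
# shuffle so the split is reproducible. stratify=y (not in the original
# call) preserves the class ratio in both subsets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

print(X_train.shape, X_test.shape)
print(y_train.mean(), y_test.mean())
```

Without stratification, a small test set can end up with noticeably more samples of one class than the other, which makes the accuracy figure harder to interpret.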

Train the voice classifier: A VoiceClassifier class is defined, encapsulating the random forest model. An instance of the VoiceClassifier is created, and the model is trained using the positive and negative training data:

# Train the voice classifier model
voice_classifier = VoiceClassifier()
voice_classifier.train(X_train, y_train)
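The VoiceClassifier definition is not shown in this listing. As a rough sketch of what such a wrapper could look like, the class below exposes the `train` and `predict` methods used in the text. The constructor arguments and the demo data are assumptions made for illustration, not the chapter's actual implementation.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


class VoiceClassifier:
    """Thin wrapper around a random forest (illustrative sketch)."""

    def __init__(self, n_estimators=100, random_state=42):
        # These hyperparameters are assumptions, not taken from the chapter
        self.model = RandomForestClassifier(
            n_estimators=n_estimators, random_state=random_state
        )

    def train(self, X_train, y_train):
        # Fit the forest on the feature matrix and its labels
        self.model.fit(X_train, y_train)

    def predict(self, X_test):
        # Return one predicted label per row of X_test
        return self.model.predict(X_test)


# Hypothetical, clearly separable data to exercise the class
rng = np.random.default_rng(0)
X_demo = np.vstack([rng.normal(3, 1, (20, 4)), rng.normal(-3, 1, (20, 4))])
y_demo = np.array([1] * 20 + [0] * 20)

clf = VoiceClassifier()
clf.train(X_demo, y_demo)
demo_predictions = clf.predict(X_demo)
```

Wrapping the estimator this way keeps the rest of the script independent of the underlying model, so the random forest could later be swapped for another classifier without changing the calling code.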

Make predictions: The trained model predicts labels for the test set:

# Make predictions on the test set
predictions = voice_classifier.predict(X_test)

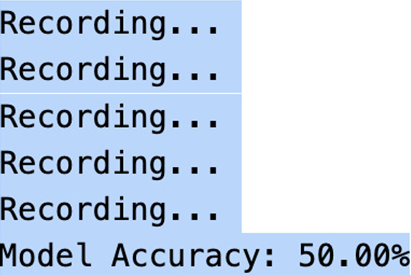

Evaluate model accuracy: The accuracy of the model is evaluated using scikit-learn’s accuracy_score function, comparing predicted labels with actual labels:

Evaluate the model

accuracy = accuracy_score(y_test, predictions)

print(f”Model Accuracy: {accuracy * 100:.2f}%”)

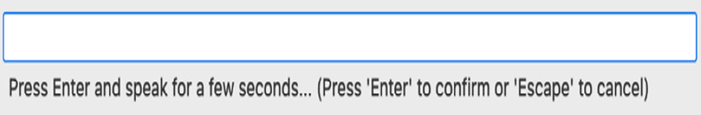

When you run this code, it prompts with the following pop-up window to enter and speak.

Figure 11.1 – Prompt to start speaking

Then, you speak a few sentences that will be recorded:

Figure 11.2 – Trained model accuracy
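Accuracy alone does not show which kind of mistake the model makes. As a complement, the sketch below breaks the result down with scikit-learn's confusion_matrix. The `y_test` and `predictions` arrays here are small hypothetical examples, not results from the chapter's model.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix

# Hypothetical labels: 1 = voice, 0 = non-voice
y_test = np.array([1, 1, 1, 0, 0, 0, 0, 1])
predictions = np.array([1, 0, 1, 0, 0, 1, 0, 1])

accuracy = accuracy_score(y_test, predictions)

# Rows are actual classes, columns are predicted classes, ordered [0, 1]
cm = confusion_matrix(y_test, predictions, labels=[0, 1])
tn, fp, fn, tp = cm.ravel()

print(f"Model Accuracy: {accuracy * 100:.2f}%")
print(f"Missed voice (false negatives): {fn}")
print(f"Noise flagged as voice (false positives): {fp}")
```

For a voice detector, the two error types usually have different costs: a false negative ignores a speaker, while a false positive reacts to background noise.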

Inference: Let’s look at a practical application of the trained voice classification model: real-time voice inference. The model, built on scikit-learn’s RandomForestClassifier, was trained earlier to distinguish positive samples (voice) from negative samples (non-voice or background noise).

The following script shows how to integrate the trained model into a real-time inference loop. You are prompted to press Enter and speak for a few seconds, after which the model predicts whether the input contains human speech or non-voice sound. The trained model is first saved at the end of the training script, and the inference script then loads it:

import joblib

# Save the trained model during training
joblib.dump(voice_classifier, "voice_classifier_model.pkl")
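Before relying on a saved model, it can be worth checking that it survives a save-and-load round trip. The following is a minimal sketch under stated assumptions: it uses a plain RandomForestClassifier trained on hypothetical random data instead of the chapter's VoiceClassifier, and it writes to a temporary directory so that nothing is left on disk.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Hypothetical stand-in for the trained voice classifier
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 4))
y = (X[:, 0] > 0).astype(int)
model = RandomForestClassifier(n_estimators=10, random_state=42).fit(X, y)

# Save to a temporary directory, then load the model back
with tempfile.TemporaryDirectory() as tmp_dir:
    model_path = os.path.join(tmp_dir, "voice_classifier_model.pkl")
    joblib.dump(model, model_path)
    restored = joblib.load(model_path)

# The restored model should reproduce the original predictions
same = np.array_equal(model.predict(X), restored.predict(X))
print("Predictions match after reload:", same)
```

When the saved object is an instance of a custom class such as VoiceClassifier, the class definition must also be importable in the script that calls joblib.load, because the file stores the object's state but not the class code.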

import numpy as np
import sounddevice as sd
from sklearn.ensemble import RandomForestClassifier
# For model persistence (sklearn.externals.joblib was removed from recent scikit-learn releases)
import joblib

# Load the pre-trained model (the VoiceClassifier class must be importable here)
voice_classifier = joblib.load("voice_classifier_model.pkl")

# Function to capture real-time audio
def capture_audio(duration=5, sampling_rate=44100):
    print("Recording...")
    audio_data = sd.rec(
        int(sampling_rate * duration),
        samplerate=sampling_rate, channels=1, dtype='int16'
    )
    sd.wait()  # Block until the recording has finished
    return audio_data.flatten()

# Function to predict voice using the trained model
def predict_voice(audio_sample):
    # The sample must have the same number of features as the training data
    prediction = voice_classifier.predict([audio_sample])
    return prediction[0]

Main program for real-time voice classification

def real_time_voice_classification():

while True:

input(“Press Enter and speak for a few seconds…”)

Capture new audio

new_audio_sample = capture_audio()

Predict if it’s voice or non-voice

result = predict_voice(new_audio_sample)

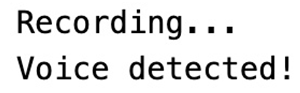

if result == 1:

print(“Voice detected!”)

else:

print(“Non-voice detected.”)

if __name__ == “__main__”:

real_time_voice_classification()

The output is as follows:

Figure 11.3 – Inference output
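The predict_voice function passes the recording directly to the model, so the recording must contain exactly as many values as the model saw per sample during training. The sketch below shows one way to enforce that by trimming or zero-padding the signal. It assumes the model was trained on raw, fixed-length samples; if it was trained on extracted features instead, the same feature extraction would have to be applied here. The `fit_to_length` helper and the demo data are hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def fit_to_length(audio_sample, n_features):
    """Trim or zero-pad a 1-D signal to exactly n_features values."""
    audio_sample = np.asarray(audio_sample).flatten()
    if len(audio_sample) >= n_features:
        return audio_sample[:n_features]
    padding = np.zeros(n_features - len(audio_sample), dtype=audio_sample.dtype)
    return np.concatenate([audio_sample, padding])


# Hypothetical model trained on 1,000-value inputs
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 1000))
y = np.array([1, 0] * 10)
model = RandomForestClassifier(n_estimators=10, random_state=42).fit(X, y)

# A "recording" that is longer than the model expects
recording = rng.normal(size=1500)
sample = fit_to_length(recording, model.n_features_in_)

# predict expects a 2-D array: one row per sample
prediction = model.predict(sample.reshape(1, -1))[0]
print("Predicted label:", prediction)
```

The fitted estimator's `n_features_in_` attribute reports the input width it was trained with, which avoids hard-coding the expected length in the inference script.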

In a similar manner, a model trained on samples labeled by speaker gender can label a voice as male or female, which helps when analyzing customer calls and understanding target customers.

Real-time voice classification is relevant in many scenarios, including voice-activated applications, security systems, and communication devices. Because the script loads a pre-trained model, it can classify each new recording shortly after it is captured, with no retraining.

Whether it is used to enhance accessibility features, automate voice commands, or implement voice-based security protocols, this script is a practical example of deploying a machine learning model for real-time voice classification. Integrating such inference models helps make applications more responsive and easier to use across many domains.

Now, let’s see how to transcribe audio using the OpenAI Whisper model.