By using this noise-augmented audio data, the model accuracy increased from 0.946 to 0.964. Depending on the data, we can apply data augmentation and test the accuracy to decide whether data augmentation is required.

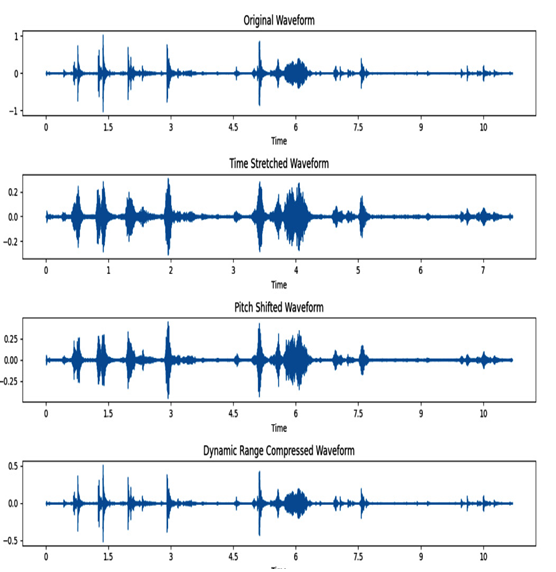

Let’s see three more data augmentation techniques applied to an original audio file – time-stretching, pitch-shifting, and dynamic range compression.

The following Python script employs the librosa library for audio processing, loading an initial audio file that serves as the baseline for augmentation. Subsequently, functions are defined to apply each augmentation technique independently. Time-stretching alters the temporal duration of the audio, pitch-shifting modifies the pitch without affecting speed, and dynamic range compression adjusts the volume dynamics.

The augmented waveforms are visually presented side by side with the original waveform using Matplotlib. This visualization aids in understanding the transformative impact of each augmentation technique on the audio data. Through this script, you will gain insights into the practical implementation of audio augmentation, a valuable practice for creating diverse and robust datasets for machine learning models.

As audio data labeling becomes increasingly integral to various applications, mastering the art of augmentation ensures the generation of comprehensive datasets, thereby enhancing the effectiveness of machine learning models. Whether applied to speech recognition, sound classification, or voice-enabled applications, audio augmentation is a powerful technique for refining and enriching audio datasets:

import librosa

import librosa.display

import numpy as np

import matplotlib.pyplot as plt

from scipy.io.wavfile import write

Load the audio file

audio_file_path = “../ch10/cats_dogs/cat_1.wav”

y, sr = librosa.load(audio_file_path)

Function for time stretching augmentation

def time_stretching(y, rate):

return librosa.effects.time_stretch(y, rate=rate)

Function for pitch shifting augmentation

def pitch_shifting(y, sr, pitch_factor):

return librosa.effects.pitch_shift(y, sr=sr, n_steps=pitch_factor)

Function for dynamic range compression augmentation

def dynamic_range_compression(y, compression_factor):

return y * compression_factor

Apply dynamic range compression augmentation

compression_factor = 0.5 Adjust as needed

y_compressed = dynamic_range_compression(y, compression_factor)

Apply time stretching augmentation

y_stretched = time_stretching(y, rate=1.5)

Apply pitch shifting augmentation

y_pitch_shifted = pitch_shifting(y, sr=sr, pitch_factor=3)

Display the original and augmented waveforms

plt.figure(figsize=(12, 8))

plt.subplot(4, 1, 1)

librosa.display.waveshow(y, sr=sr)

plt.title(‘Original Waveform’)

plt.subplot(4, 1, 2)

librosa.display.waveshow(y_stretched, sr=sr)

plt.title(‘Time Stretched Waveform’)

plt.subplot(4, 1, 3)

librosa.display.waveshow(y_pitch_shifted, sr=sr)

plt.title(‘Pitch Shifted Waveform’)

plt.subplot(4, 1, 4)

librosa.display.waveshow(y_compressed, sr=sr)

plt.title(‘Dynamic Range Compressed Waveform’)

plt.tight_layout()

plt.show()

Here is the output:

Figure 11.10 – Data augmentation techniques – time stretching, pitch shifting, and dynamic range compression

Now, let’s move on to another interesting topic in labeling audio data in the next section.

Introducing Azure Cognitive Services – the speech service

Azure Cognitive Services offers a comprehensive set of speech-related services that empower developers to integrate powerful speech capabilities into their applications. Some key speech services available in Azure AI include the following:

- Speech-to-text (speech recognition): This converts spoken language into written text, enabling applications to transcribe audio content such as voice commands, interviews, or conversations.

- Speech Translation: This translates spoken language into another language in real time, facilitating multilingual communication. This service is valuable for applications requiring language translation for global audiences.

These Azure Cognitive Services speech capabilities cater to a diverse range of applications, from accessibility features and voice-enabled applications to multilingual communication and personalized user experiences. Developers can leverage these services to enhance the functionality and accessibility of their applications through seamless integration of speech-related features.